The Megapower of Microtargeting

The 2016 US presidential election marked a turning point in how political campaigns interact with voters. Donald Trump’s victory wasn’t just about traditional political strategies — it also depended on the innovative and controversial use of digital advertising. Behind the scenes, a little-known company called Cambridge Analytica played an important role in Trump’s campaign. This company used large amounts of personal data harvested from millions of Facebook users to create highly targeted political ads designed to influence voters’ behaviour.

Cambridge Analytica, founded as a private intelligence company and self-described as a “global election management agency”, set out to combine behavioural psychology with data analytics. At the core of its strategy was the work of Cambridge University researcher Michal Kosinski, whose research on predicting personality traits from digital records [1] laid the foundation for the company’s methods. By analysing Facebook likes, Cambridge Analytica could build detailed profiles, which were then used to microtarget voters with tailored content. But how exactly was this data used to influence elections, and what did it mean for the future of advertising? In this article, we’ll delve into the rise of microtargeting, how it shaped the 2016 election, and its lasting implications for how political campaigns, and advertisers, use data to sway public opinion.

Microtargeting

Microtargeting represents a shift in how (political) advertising is conducted: it uses large amounts of personal data to tailor messages that resonate with individual voters. It involves analysing data points ranging from demographic information to online behaviour, particularly social media activity, to segment the electorate into finely tuned categories. This allows campaigns to deliver highly personalised advertisements aimed at specific personality types and behaviours. The foundations of microtargeting were laid by advances in data analytics and behavioural psychology, notably through the research of Michal Kosinski and his colleagues at the University of Cambridge.

At its core, microtargeting relies on data harvested from various digital sources, with Facebook as a prime example because of its extensive user base and the amount of information users voluntarily share. Kosinski’s study [1] analysed the likes of over 58,000 Facebook users as indicators of their preferences, beliefs, and even psychological traits, an approach Cambridge Analytica later built on. Using machine learning techniques, the researchers could predict sensitive personal attributes such as sexual orientation, political affiliation, and personality traits with startling accuracy. For instance, the model correctly distinguished homosexual from heterosexual men in 88% of cases and homosexual from heterosexual women in 75% of cases, while it separated African Americans from Caucasian Americans in 95% of cases. Furthermore, liking ‘curly fries’ turned out to be one of the better predictors of high intelligence (wink to all curly fry lovers out there).
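The percentages above are best read as pairwise discrimination rates. The paper reports them as the area under the ROC curve (AUC), which equals the probability that the model scores a randomly chosen member of one group higher than a randomly chosen member of the other. The small Python sketch below computes that quantity directly; the function name `pairwise_auc` and the scores are hypothetical, invented purely for illustration.

```python
from itertools import product


def pairwise_auc(scores, labels):
    """Share of (positive, negative) pairs that the model ranks correctly.

    A pair counts as correct when the positive example gets the higher score;
    ties count as half a correct pair. This equals the area under the ROC curve.
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    correct = 0.0
    for p, n in product(pos, neg):
        if p > n:
            correct += 1.0
        elif p == n:
            correct += 0.5
    return correct / (len(pos) * len(neg))


# Hypothetical model scores, e.g. the predicted probability of belonging to group 1.
scores = [0.9, 0.8, 0.4, 0.7, 0.3, 0.2]
labels = [1, 1, 1, 0, 0, 0]
print(pairwise_auc(scores, labels))
```

Here 8 of the 9 (positive, negative) pairs are ordered correctly, so the function returns 8/9, roughly 0.89. A value of 0.5 would mean the model does no better than a coin flip.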

This ability to make accurate predictions from seemingly trivial data, such as likes on random Facebook pages, enabled campaigns to craft messages that were not only relevant but also emotionally resonant with target audiences. For example, users who expressed a love for certain types of music could receive ads with their favourite song as the background track, creating the impression of a deep connection between the campaign and the voter.

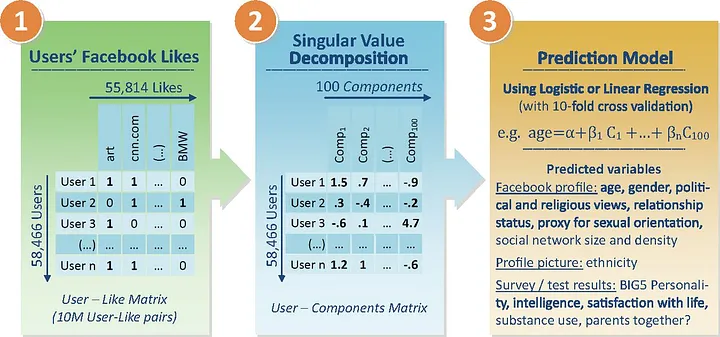

In their paper, Kosinski and his colleagues explain how simple this conversion of likes into private traits is. Users and their likes are organised in a sparse matrix, with each row representing a user and each column corresponding to a liked item, such as a Facebook page. An entry of ‘1’ indicates that a user has liked that item, while a ‘0’ denotes that they have not. This large sparse matrix is then compressed with a dimensionality reduction technique known as singular value decomposition (SVD). The technique is similar to those employed in the recommender systems of platforms like Netflix and YouTube, but instead of identifying new content for users, the focus is on uncovering patterns of similarity among users. By combining the reduced data with the results of a “personality test” that some users completed, researchers can train a model that predicts private information about other users, information those users have never shared online. Figure 1 illustrates this process.
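The pipeline can be sketched in a few lines of Python with NumPy. This is a minimal illustration under stated assumptions, not Kosinski’s code: the like matrix and trait labels are invented toy data, the matrix is stored densely rather than sparsely, and only two SVD components are kept (the paper reports using the top 100 components, with logistic regression for yes/no traits and linear regression for numeric ones). The helper names are hypothetical, and the logistic regression is a hand-written gradient-descent version so that nothing beyond NumPy is needed.

```python
import numpy as np

# Hypothetical toy data: rows are users, columns are Facebook pages.
# 1 = the user liked the page, 0 = they did not.
likes = np.array([
    [1, 1, 1, 0, 0, 0],  # users 0-2 took the "personality test": trait = 1
    [1, 1, 0, 0, 0, 0],
    [1, 0, 1, 0, 0, 1],
    [0, 0, 0, 1, 1, 1],  # users 3-5 took the test: trait = 0
    [0, 0, 0, 1, 1, 0],
    [0, 0, 1, 1, 0, 1],
    [0, 1, 1, 0, 0, 0],  # users 6-7 never disclosed the trait
    [0, 0, 0, 0, 1, 1],
], dtype=float)
known = slice(0, 6)    # users with a test result
unknown = slice(6, 8)  # users whose trait we want to predict
trait = np.array([1, 1, 1, 0, 0, 0], dtype=float)


def user_components(matrix, k):
    """Reduce the user-like matrix to k dimensions with SVD.

    The SVD is unsupervised, so it uses every user, labelled or not.
    Returns each user's coordinates along the top-k right singular vectors.
    """
    _u, _s, vt = np.linalg.svd(matrix, full_matrices=False)
    return matrix @ vt[:k].T


def add_intercept(x):
    """Prepend a column of ones so the model can learn a bias term."""
    return np.hstack([np.ones((x.shape[0], 1)), x])


def fit_logistic(x, y, lr=0.5, steps=2000):
    """Logistic regression trained with plain batch gradient descent."""
    x = add_intercept(x)
    w = np.zeros(x.shape[1])
    for _ in range(steps):
        p = 1.0 / (1.0 + np.exp(-(x @ w)))
        w -= lr * x.T @ (p - y) / len(y)
    return w


def predict_proba(x, w):
    """Predicted probability that each user has the trait."""
    return 1.0 / (1.0 + np.exp(-(add_intercept(x) @ w)))


components = user_components(likes, k=2)
weights = fit_logistic(components[known], trait)
print(np.round(predict_proba(components[unknown], weights), 3))
```

Users 6 and 7 never took the test, yet the model assigns user 6, whose likes resemble those of the first group, a probability close to 1, and user 7 a probability close to 0. For a real matrix with millions of users and pages, one would keep the data sparse and compute only the top components, for instance with `scipy.sparse.linalg.svds`, instead of a full `np.linalg.svd`.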

Worth mentioning is how the data reached Cambridge Analytica in the first place. According to The Guardian [2], it was another Cambridge researcher, Aleksandr Kogan, who used a personality-quiz app to harvest Facebook data, ostensibly for academic use, and passed it on to Cambridge Analytica, giving the company access to otherwise private information. The company then iterated on the technique to build tools for election campaign management.

The 2016 US presidential election

Although microtargeting had been on the rise since around 2013, it was employed on an unprecedented scale during the 2016 US presidential election. Cambridge Analytica, working closely with the Trump campaign, used data analytics to identify key voter segments in battleground states. Its profiles captured not only demographic information but also psychological predispositions, which allowed the campaign to show messages that appealed to voters’ emotions rather than just their beliefs [3].

For example, one targeted ad campaign might focus on economic anxiety among working-class voters, specifically addressing concerns about job security and the effects of globalisation. Another might seek to swing conservative voters by emphasising themes of nationalism and traditional values. By delivering these messages through carefully selected online platforms, the Trump campaign aimed to maximise the impact of their advertising spend.

The exact effect of this strategy is hard to measure, but it may well have mattered. In states like Wisconsin, Michigan, and Pennsylvania, where the margins of victory were razor-thin, microtargeted ads could have played a crucial role in convincing undecided voters and keeping loyal supporters committed.

Ethical considerations and the future of advertising

The rise of microtargeting has raised ethical questions regarding privacy, consent, and the manipulation of personal data. The use of platforms like Facebook to gather data without explicit user consent has sparked debates about the limits of data usage in political campaigning. Additionally, the potential for misinformation and the creation of echo chambers — where users are only exposed to views that reinforce their own — poses risks to democratic discourse.

As technology continues to evolve, so too will the strategies employed in microtargeting. The implications extend beyond politics into the broader advertising industry, where personalised marketing is becoming the norm. Companies are increasingly adopting similar tactics to enhance customer engagement and drive sales, blurring the lines between political and commercial advertising. The American retail chain Target, for example, was able to predict from shopping data which of its customers were pregnant, in some cases before they had told their own families, and then recommended pregnancy products to them [4].

In conclusion, microtargeting has transformed the landscape of political campaigning and global marketing, with the 2016 election as its most prominent example. As political entities and corporations alike discover the power of data analytics, the challenge remains to navigate the ethical implications while ensuring that user privacy and democratic processes are respected and protected.

This article was published in “Machazine” 2024–2025 Q2 edition, the magazine of the Study Association W.I.S.V. Christiaan Huygens (TU Delft)

References

[1] Kosinski, M., Stillwell, D., & Graepel, T. (2013). Private traits and attributes are predictable from digital records of human behavior. Proceedings of the National Academy of Sciences, 110(15), 5802–5805. https://doi.org/10.1073/pnas.1218772110

[2] Cadwalladr, C., & Graham-Harrison, E. (2018, March 17). How Cambridge Analytica turned Facebook ‘likes’ into a lucrative political tool. The Guardian. https://www.theguardian.com/technology/2018/mar/17/facebook-cambridge-analytica-kogan-data-algorithm

[3] Delcker, J. (2020, January 28). POLITICO AI: Decoded: How Cambridge Analytica used AI — No, Google didn’t call for a ban on face recognition — Restricting AI exports. POLITICO. https://www.politico.eu/newsletter/ai-decoded/politico-ai-decoded-how-cambridge-analytica-used-ai-no-google-didnt-call-for-a-ban-on-face-recognition-restricting-ai-exports/

[4] Duhigg, C. (2012). The power of habit: Why we do what we do in life and business. Random House.